What poses the greatest threat to democracy and digital freedom today?

Ask yourself this: are we focusing on compliance because it is measurable, reportable, and easy to present in a boardroom, while ignoring the deeper, more chaotic cyber threats that truly undermine democracy? When politicians openly fire intelligence officials, dismiss evidence of hacking, or cast doubt on the electoral process, a hard question follows: which is most dangerous, technical overreach by corporations, political destabilization by populists, or covert attacks by state-backed hackers?

This is not hypothetical. AI tools scrape data quietly in the background. Political leaders rewrite reality in 280 characters. And somewhere out of sight, an APT group moves through a government network undetected.

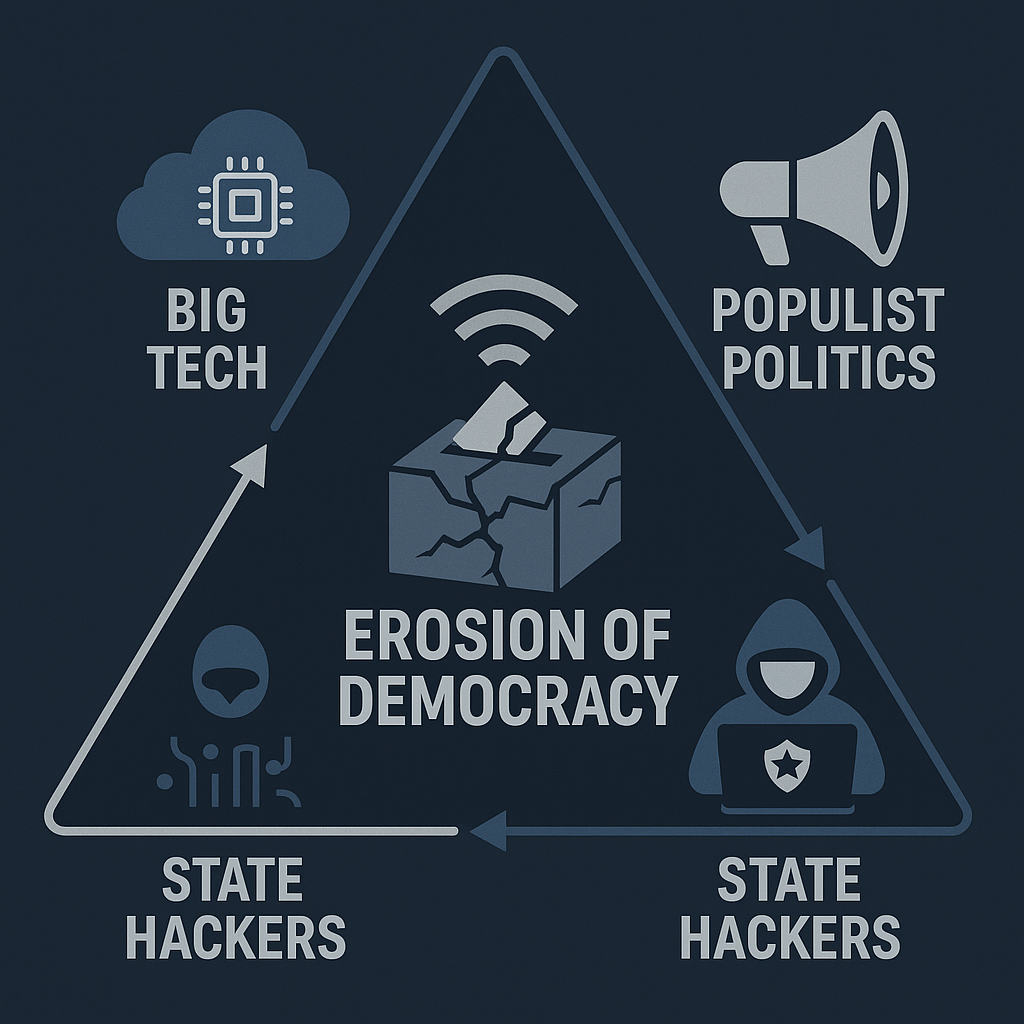

What is worse: the slow erosion of digital rights under corporate compliance regimes, or the blunt-force trauma of populist-driven disinformation? Or is the real threat the way all three forces (Big Tech, populist politics, and state hackers) converge and reinforce each other?

Big Tech surveillance and cloud dominance

Major tech companies operate vast cloud infrastructures and increasingly advanced AI systems. Microsoft, among others, has faced scrutiny for internal policies and product features that hint at overreach. In May 2025, the company barred employees from using the Chinese AI app DeepSeek, citing fears that sensitive information could “go back to China” or that the tool might generate biased content.[2]

Microsoft’s own AI assistant, Copilot, raised related concerns. Analysts warned that because Copilot can reach whatever a user is permitted to access across Microsoft 365, over-permissioned accounts risk inadvertent data exposure (e.g., intellectual property, financial records, personal information), especially where access controls are not strict.[3]

Even more controversial is Recall, Microsoft’s AI-powered screen-history feature, announced in 2024 and rolled out in 2025. As originally designed, Recall captured snapshots of everything viewed on a PC and was widely criticized for behaving like spyware: it took unfiltered screenshots every few seconds, including passwords and other sensitive data.[3] After widespread backlash, Microsoft revised Recall to be opt-in, to encrypt data locally, and to limit corporate deployment, but more recent investigations show it still captures private data and, in many cases, fails to filter out credit card numbers and passwords.[3][4]

Microsoft's internal censorship practices also came under fire when employees discovered that internal emails containing words such as “Palestine” or “Gaza” were being blocked. Microsoft later acknowledged filtering politically charged terms during internal protests, prompting accusations of algorithmic censorship and of silencing dissent.[4]

The cloud dominance of a few U.S. tech giants introduces another risk: centralization. Global reliance on U.S.-based infrastructure creates the potential for mass surveillance, not only by the corporations themselves but also by intelligence agencies with access to that infrastructure. Microsoft’s cloud platform is reportedly used by militaries, including the Israeli military during its recent Gaza operations, a relationship that sparked protests from employees on ethical grounds in early 2025.[5]

Example of overreach: centralized AI tools collecting massive datasets across organizations, with risks of unintended exposure, corporate censorship, and indirect participation in military activity.

Motives and methods: Tech giants frame their actions as lawful, security-driven, and industry-standard. Their methods, however, include highly centralized cloud infrastructure, proprietary AI platforms, and strict usage policies that disproportionately constrain employee and user freedom.

Long-term impact: Overconcentration creates surveillance capacity, policy influence, and information choke points. Centralized control over digital tools lets a few firms shape public discourse, suppress topics, or indirectly empower state actors who use their services.

Populist political forces

Populist leaders such as Donald Trump have demonstrated how digital platforms can be weaponized for disinformation and institutional erosion. The aftermath of the 2020 U.S. election was a warning: false claims of voter fraud undermined trust in democratic systems. In cybersecurity matters, populist narratives further complicate threat attribution. For example, when the 2020 SolarWinds hack was attributed to Russian intelligence (APT29/Cozy Bear), President Trump cast doubt on the attribution and publicly suggested China might be responsible, contradicting his own intelligence agencies.[5]

Example of manipulation: populist leaders use social media to spread “alternative facts,” memes, and even deepfakes, reinforcing echo chambers along the way. The speed and virality of false information make it harder for facts to regain traction.

Motives and methods: Populist tactics revolve around direct communication, distrust of institutions, and emotional language. By framing news and facts as elite propaganda, populists bypass traditional media and flood digital channels with biased content.

Long-term impact: The erosion of consensus around shared facts leads to institutional paralysis. Courts, press, and democratic bodies lose credibility. Polarization deepens, and democratic norms are replaced by loyalty tests and misinformation bubbles.

Hostile cyber actors

State-sponsored hacker groups are the most direct and operational threat to both digital freedom and democratic stability. The SolarWinds breach remains a case study in scale and subtlety. The Russian-linked group APT29 inserted malicious code into a routine software update for SolarWinds’ Orion platform, giving it potential access to thousands of networks worldwide, including those of the U.S. Treasury and Commerce Departments.[1]

The breach went undetected for months, allowing espionage, lateral movement, and data theft. It illustrated the fragility of digital trust: a trusted update mechanism became the entry point for widespread surveillance.

Example of cyberattack: SolarWinds demonstrated how a supply-chain compromise can impact governments, businesses, and nonprofits in one sweep. Attackers used admin tools to blend in and exfiltrate sensitive information undetected.

Motives and methods: Nation-state groups often pursue long-term intelligence collection, infrastructure compromise, and psychological influence. Common methods include phishing, zero-day exploits, long-term persistence inside compromised networks, and malware embedded in trusted systems. These operations are typically low-noise and high-impact.

Long-term impact: Systemic compromise weakens trust in software, in government, and even in democracy itself. Elections, power grids, and financial systems may be at risk, while retaliation is hampered by the difficulty of attributing attacks with confidence and of calibrating a proportional response.

Which threat is most dangerous?

The core argument holds: this is not about ranking threats but about recognizing their convergence.

- Big Tech enables global-scale information control.

- Populists exploit that infrastructure to spread division.

- State actors exploit confusion to compromise critical systems.

While compliance frameworks chase measurable controls, these three actors reinforce each other in ways that evade dashboards and policy audits.

We defend the visible (logs, retention, alerts), but the real damage happens in plain sight and goes unmeasured: in the quiet capture of screen data, the normalization of misinformation, and the slow burn of breached systems left unpatched.

The biggest risk is missing the intersection of corporate, political, and adversarial influence.

Personal reflection and call to vigilance

Protecting digital freedom now requires three parallel tracks:

- Big Tech accountability: push for transparency, algorithmic oversight, decentralization, and open standards. Legislation must catch up with AI and cloud centralization.

- Civic resilience: invest in media literacy, restore truth in political discourse, and protect journalistic and institutional independence.

- Cyber defense and norms: strengthen infrastructure, promote international cyber norms, and create mechanisms to respond to hybrid warfare below the kinetic threshold.

This is a moment for vigilance: not a crisis of despair, but one of responsibility. What we tolerate today will shape the norms of tomorrow.

Summary comparison

| Force | Key risk | Primary methods | Long-term hazard |
|---|---|---|---|
| Big Tech Surveillance | Privacy erosion; information control | Centralized cloud platforms; AI-driven data monitoring; content moderation | Censorship, mass surveillance, corporate influence over policy |
| Populist Politics | Disinformation; institutional distrust | Social media propaganda; cult of personality; undermining facts | Polarization, democratic backsliding, normalization of political violence |
| Hostile Cyber Actors | Espionage; infrastructure disruption | Supply-chain hacks; malware & APTs; ransomware | Systemic chaos, economic damage, escalation into real-world conflict |

The future of digital democracy depends on our ability to see all three threats as interconnected. No threat acts alone; each feeds the next.